Artificial Intelligence needs three things to work: power to run the systems, chips to process the data, and facilities to house it all. The buildout happening now is one of the largest in tech history, with billions flowing into advanced data centers. Inside, you’ll find top-tier GPUs like NVIDIA’s H100 and A100, ultra-fast storage, and high-speed links that connect thousands of servers. New cooling methods, stronger temperature control, and smarter power management are becoming standard features.

This transformation isn’t only about training AI models in massive facilities. Smaller, strategically placed edge data centers are bringing computing power closer to users. That’s improving speed for services like video streaming, mobile apps, IoT devices, and 5G applications. Building and running this infrastructure means ensuring fast, secure connections and reliable monitoring to keep everything running around the clock.

Many of the companies involved in this technology buildout have already generated returns well in excess of the broader equity market. Over recent years, a relatively small group of large-cap technology leaders has accounted for a meaningful share of overall market performance. Companies such as NVIDIA and Microsoft have been major drivers of market gains. Several other AI hardware and infrastructure names have also had standout performances.

In this article, we’ll break these opportunities into three clear groups. AI hardware suppliers create the critical components. Hyperscalers operate massive cloud platforms that consume the most AI computing power. Pure-play data center operators provide the physical space, power, and cooling. Together, they form the backbone of today’s AI economy.

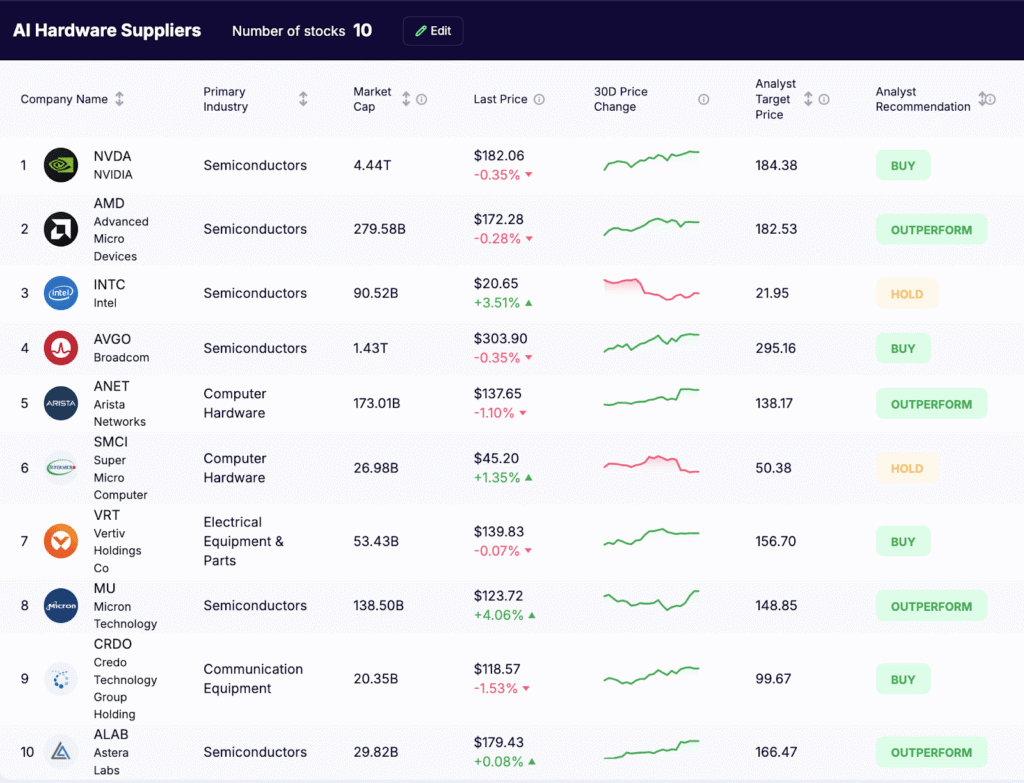

1) AI Hardware Suppliers

These companies provide chips, connectivity, cooling, servers, and storage that enable high-performance computing. They sell into cloud providers, colocation providers, and large enterprises modernizing information technology management.

- NVIDIA (NASDAQ:NVDA)

Designs the accelerators powering much of the AI market. Current fleets rely heavily on the H100 and A100 GPUs, while production of its new Blackwell-based B200 series is ramping. NVIDIA InfiniBand continues to dominate large-cluster interconnects, though the company is also expanding Ethernet-based options for broader adoption.

- Advanced Micro Devices (NASDAQ:AMD)

Growing its AI data center presence with MI300 series accelerators, which have secured multiple hyperscaler design wins. AMD is preparing its next-gen MI400 platform for 2026 to maintain competitiveness against NVIDIA’s Blackwell.

- Intel (NASDAQ:INTC)

Supplying Xeon CPUs for AI inference and Gaudi 2/3 accelerators for training workloads. Intel is using its foundry services to win manufacturing deals in AI-related semiconductors, positioning itself as both a supplier and a contract manufacturer.

- Broadcom Inc. (NASDAQ:AVGO)

Provides high-speed networking chips and custom silicon critical for AI workloads. Its Jericho3-AI switch platform and custom ASICs for hyperscalers are gaining traction as AI data center traffic requirements soar.

- Arista Networks (NYSE:ANET)

Leads in high-throughput Ethernet switching for AI clusters. Recent revenue growth reflects strong demand from hyperscale customers building out GPU fabrics.

- Super Micro Computer (NASDAQ:SMCI)

Specializes in quick-turn AI-optimized servers. In August 2025, SMCI announced expanded manufacturing capacity to handle orders for Blackwell-based systems and AMD MI300 servers.

- Vertiv (NYSE:VRT)

Key provider of liquid cooling, HVAC systems, and power distribution units for high-density AI deployments. Vertiv continues to see record orders as more operators adopt liquid cooling technology for AI racks.

- Pure Storage (NYSE:PSTG)

Delivers high-performance flash storage solutions that feed GPUs without bottlenecks. Its FlashBlade and FlashArray lines remain popular in AI training clusters for handling large, unstructured datasets.

- Micron Technology (NASDAQ:MU)

Supplies high-bandwidth memory (HBM3E) and NAND for AI workloads. Demand for Micron’s AI memory products remains elevated as GPU makers push for more on-package bandwidth.

- Credo Technology (NASDAQ:CRDO)

Designs active electrical cables and SerDes solutions for extending PCIe and CXL connections, enabling larger GPU clusters without sacrificing performance.

- Astera Labs (NASDAQ:ALAB)

Produces CXL connectivity chips, PCIe retimers, and fabric switches for rack-scale AI systems. Its solutions help manage memory pooling and disaggregation in AI data centers.

Why it matters: Every extra token generated by AI algorithms depends on this layer. The more clusters scale, the more demand for cooling, optics, connectivity silicon, and memory.

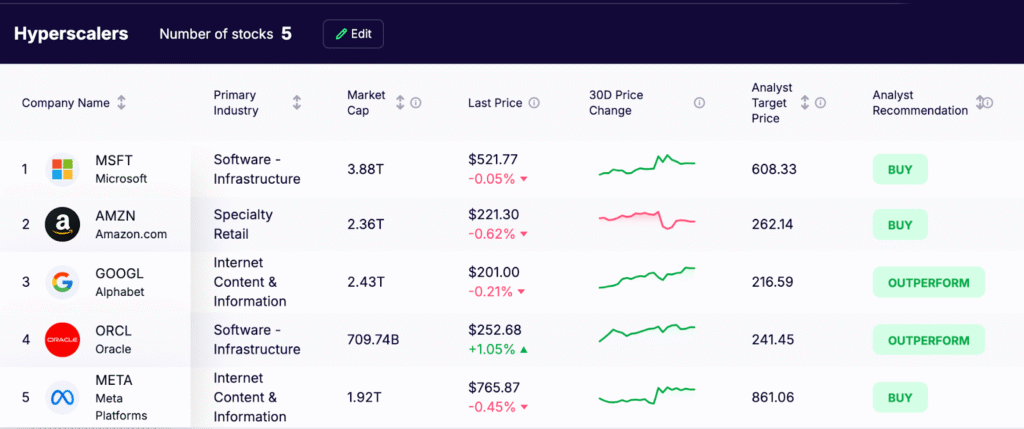

2) Hyperscalers

These platforms deploy new capacity first, set performance targets, and shape vendor roadmaps. Their capex decisions ripple through digital technologies and enterprise digital transformation.

- Microsoft (NASDAQ:MSFT)

Microsoft continues to expand Azure’s AI capacity across multiple regions. The company has significantly increased capital spending in 2025 and is expected to maintain elevated investment levels through 2026–2028, with a large portion directed toward AI-focused data centers in the United States. Azure’s AI-related growth remains a key contributor to Microsoft’s overall revenue gains this year.

- Amazon (NASDAQ:AMZN)

Amazon Web Services (AWS) offers large-scale AI infrastructure powered by both NVIDIA GPUs and its own custom chips, Trainium and Inferentia. The newer Inferentia2 chips are designed to improve efficiency and lower costs for AI workloads. AWS operates some of the largest GPU clusters available for training advanced models.

- Alphabet (NASDAQ:GOOGL)

Google Cloud continues to expand its AI capabilities using its proprietary Tensor Processing Units (TPUs). The latest TPU generation, Ironwood, is designed for faster AI inference and higher memory capacity, helping to support a broad range of enterprise AI applications.

- Oracle (NYSE:ORCL)

Oracle Cloud Infrastructure (OCI) has increased its AI computing capacity with clusters built on NVIDIA and AMD GPUs. The company offers high-performance instances and tools aimed at large enterprises adopting AI for data analysis, automation, and cloud-based workloads.

- Meta Platforms (NASDAQ:META)

Meta is building new AI data centers to support its Llama AI models and other machine learning projects. These facilities are designed to handle very large GPU deployments and provide the computing power needed for both research and large-scale user applications.

Performance context: NVIDIA and Microsoft were outsized drivers of 2025 index gains, highlighting how hyperscaler and chip momentum influences the S&P 500.

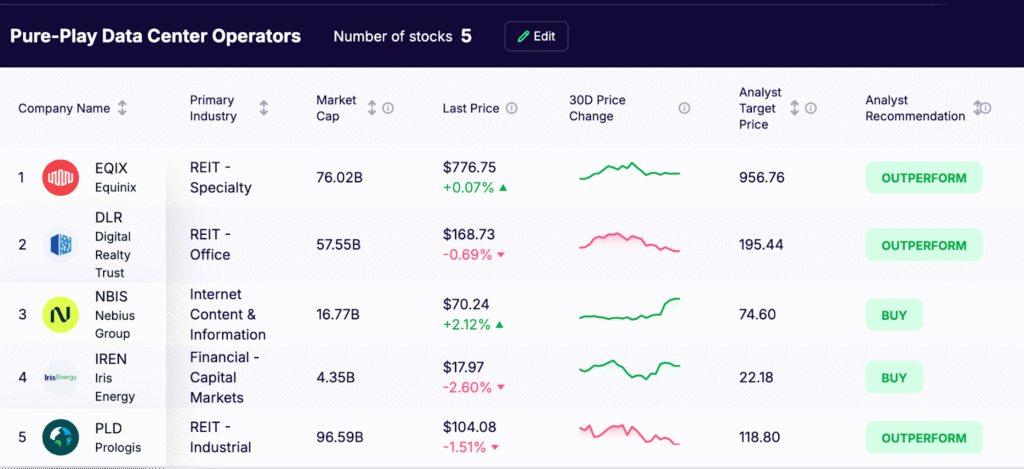

3) Pure-Play Data Center Operators

These firms secure power, land, and fiber, then deliver data center solutions for AI tenants. Think long lead times, complex permits, and deep security standards.

- Equinix (NASDAQ:EQIX)

Equinix operates one of the largest global networks of interconnected data centers. Its facilities link directly to major cloud platforms, which helps reduce latency and improve reliability for AI workloads. The company continues to expand its footprint in key markets to meet growing demand for high-density deployments.

- Digital Realty (NYSE:DLR)

Digital Realty runs large-scale data center campuses designed for hyperscale and AI customers. It is upgrading sites to support higher power density, liquid cooling, and advanced power distribution, which are increasingly necessary for GPU-intensive applications.

- Nebius Group (NASDAQ:NBIS)

Nebius operates AI-focused cloud and data center infrastructure and is listed on Nasdaq. The company has reported strong revenue growth in 2025 and is working to secure additional large-scale power capacity to expand its AI services.

- Iris Energy (NASDAQ:IREN)

Iris Energy is adapting its existing power infrastructure (originally used for cryptocurrency mining) to host AI GPU clusters. It has ordered new NVIDIA Blackwell GPUs to support its move into AI cloud hosting.

- Prologis (NYSE:PLD)

Prologis is a major industrial real estate owner and developer. In addition to its logistics properties, it is working with partners on select data center projects, including a planned conversion in Chicago that would add significant power capacity. While not a traditional data center operator, these projects mark a growing area of interest for the company.

Pure-play data center operators are essential for scaling AI workloads beyond the walls of hyperscalers. They control the power, space, and connectivity needed to run the most advanced AI models, making them key beneficiaries of the current infrastructure boom. As demand for high-density capacity and low-latency interconnection grows, these companies are well positioned to capture new business from both cloud providers and enterprises deploying AI at scale.

What To Watch Next

1. Power supply constraints will be a defining competitive factor.

As AI data centers transition toward rack power densities exceeding 80–100 kW, securing stable and affordable electricity is emerging as the single biggest gating factor for growth. Operators with long-term utility contracts, direct access to renewable energy sources, or integration of on-site generation (including fuel cells and small modular nuclear designs) will have a structural cost advantage. Grid congestion and permitting delays in high-demand regions, such as Northern Virginia and parts of Texas, are already pushing hyperscalers and colocation providers to explore new geographies. The winners will be those that can bring capacity online quickly without compromising energy pricing stability.

2. Thermal management is evolving into a strategic discipline.

The increase in GPU power consumption, especially with the introduction of NVIDIA Blackwell and next-gen AMD MI400 accelerators, has pushed many facilities beyond the practical limits of traditional air cooling. Liquid cooling technology (including direct-to-chip cold plate systems and immersion cooling) is no longer experimental; it’s becoming a baseline requirement for high-density deployments. Forward-thinking operators are pairing these systems with advanced HVAC optimization and AI-driven thermal monitoring to reduce operational costs and improve equipment lifespan. Over the next 18–24 months, adoption speed and integration efficiency will differentiate leaders from laggards.

3. Network interconnection is an enduring moat in the AI era.

For large-scale AI training and inference, proximity to major communication networks and dense ecosystems of cloud and enterprise peers is as critical as raw compute power. Rich fiber interconnection assets, private cloud on-ramps, and low-latency metro connectivity can make the difference between a competitive AI deployment and an underperforming one. This advantage compounds over time – platforms like Equinix Fabric or Digital Realty’s Service Exchange benefit from network effects, attracting more tenants and increasing switching costs for customers. In practice, the best-connected data centers allow AI operators to move petabytes of training data efficiently, interlink hybrid cloud environments, and shorten model deployment cycles.
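Two of the points above reduce to back-of-envelope arithmetic: how rack density translates into total facility power, and how link speed bounds the time needed to move training data. The Python sketch below is illustrative only. The function names, the 1,000-rack fleet, the PUE of 1.3, the 80% link utilization, and the 100 and 400 Gbps link speeds are assumptions chosen for the example, not figures from any operator; only the 80–100 kW rack densities come from the discussion above.

```python
def facility_power_mw(racks: int, kw_per_rack: float, pue: float = 1.3) -> float:
    """Estimate total facility draw in megawatts.

    IT load is racks * kW per rack; multiplying by PUE (power usage
    effectiveness) adds cooling and distribution overhead.
    The default PUE of 1.3 is an assumed value for illustration.
    """
    it_load_kw = racks * kw_per_rack
    return it_load_kw * pue / 1000.0


def transfer_hours(petabytes: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate hours needed to move a dataset over a network link.

    Uses decimal units (1 PB = 1e15 bytes, 1 Gbps = 1e9 bits/s).
    The default efficiency of 0.8 is an assumed usable share of line rate.
    """
    total_bits = petabytes * 1e15 * 8
    effective_bits_per_second = link_gbps * 1e9 * efficiency
    return total_bits / effective_bits_per_second / 3600.0


if __name__ == "__main__":
    # Hypothetical 1,000-rack deployment at the densities cited in the text.
    for kw in (80, 100):
        print(f"1,000 racks at {kw} kW: {facility_power_mw(1000, kw):.0f} MW")

    # Hypothetical link speeds for moving 1 PB of training data.
    for gbps in (100, 400):
        print(f"1 PB over {gbps} Gbps: {transfer_hours(1, gbps):.1f} h")
```

Under these assumptions, 1,000 racks at 80 kW works out to roughly 104 MW of facility power and 130 MW at 100 kW, while a single petabyte takes about 28 hours to move over a 100 Gbps link and about 7 hours over 400 Gbps. Actual figures depend on site design and network conditions.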

Bottom line: The next phase of AI infrastructure leadership will be determined not only by who can build the most data center space, but by who can secure scalable power, manage thermals efficiently, and create interconnection ecosystems that give customers long-term performance and cost advantages.

Quick FAQ

Q: Are hyperscalers in the same business as colocation providers?

A: No. Hyperscalers build and run their own clouds. Colocation focuses on selling space, power, and interconnection to many customers.

Q: Is Prologis a data center pure play?

A: Not today. It is an industrial REIT developing select data center projects with partners.

Q: What role do Credo and Astera Labs play?

A: Credo primarily provides connectivity solutions for high-speed data transfer within and between data centers, and Astera Labs focuses on connectivity chips that enable CXL for memory expansion and pooling.

Q: Why is Iris Energy relevant?

A: It is repurposing power-rich sites to host GPUs and has disclosed new orders to expand AI capacity.

Q: Does interconnection really matter?

A: Yes. Without robust interconnection the building is a warehouse with servers. AI depends on fast, resilient pathways between clouds, partners, and users.

Key Takeaways

AI infrastructure is best understood as three interconnected ecosystems – hardware suppliers, hyperscalers, and pure-play data center operators – each with distinct growth drivers, investment cycles, and competitive pressures. Strength in one group can offset slowdowns in another.

Power and cooling capacity are now as critical to competitiveness as chip performance. Projects can be delayed or scaled back if operators cannot secure stable energy contracts or deploy advanced thermal management, even when hardware demand is strong.

Leading AI infrastructure stocks outperformed the S&P 500 in 2025, underscoring that exposure to this segment has already become a significant driver of equity market returns.

Diversification across the three categories can reduce reliance on any single technology cycle. For example, during pauses in chip upgrade demand, hyperscalers and data center REITs may still grow on the back of multi-year contracts and committed power expansions.

High-quality interconnection ecosystems are emerging as a competitive moat. Dense fiber networks, cloud on-ramps, and rich peer ecosystems can enhance AI workload efficiency as much as hardware upgrades.

Long-term winners are likely to be companies with secure, scalable access to clean or low-cost power. Integration of renewable energy, fuel cells, or other stable power sources will be essential to overcome the persistent bottleneck of power supply constraints in scaling generative AI.